A/B Testing

Split test your Payment Recovery campaigns to discover which strategies work best for recovering failed payments and maximizing revenue retention. A/B testing enables you to make data-driven decisions about your recovery campaigns through controlled experiments with statistical confidence.

Overview

The A/B Testing feature for Payment Recovery allows you to create controlled experiments comparing different campaign variations to optimize recovery rates and revenue. By testing different approaches side-by-side, you can identify the most effective strategies for recovering failed payments from your customers.

When you create an A/B test, Churnkey automatically splits incoming failed payments between two campaign variations, tracks their performance, and provides detailed analytics with statistical significance testing to help you determine which variation performs better. This takes the guesswork out of campaign optimization and ensures your recovery efforts are as effective as possible.

The system uses rigorous statistical analysis with a 95% confidence level to determine whether differences between variations are meaningful or simply due to random chance. This means you can trust the results to guide important business decisions about your payment recovery strategy.

Metric Hierarchy and Statistical Foundation

Recovery Rate: Primary Statistical Metric

Recovery rate serves as the primary metric for all statistical analysis, significance testing, and winner determination in Churnkey's A/B testing engine. The statistical algorithms use recovery rate percentages to perform two-proportion z-tests and determine statistical significance at the 95% confidence level.
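For reference, the textbook form of a two-proportion z-test is written out below. It assumes the pooled-variance, two-sided variant; the exact variant Churnkey computes is not specified in this guide. The symbols are notation introduced here for illustration: $x_A, x_B$ are the recovered campaigns and $n_A, n_B$ the total campaigns in each variation.

```latex
\hat{p}_A = \frac{x_A}{n_A}, \qquad
\hat{p}_B = \frac{x_B}{n_B}, \qquad
\hat{p} = \frac{x_A + x_B}{n_A + n_B}

z = \frac{\hat{p}_B - \hat{p}_A}
         {\sqrt{\hat{p}\,(1 - \hat{p})\left(\frac{1}{n_A} + \frac{1}{n_B}\right)}}
```

Under these assumptions, a difference is significant at the 95% confidence level when $|z| > 1.96$.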

Revenue Amount: Secondary Business Context

Revenue data is tracked and displayed as secondary information for business impact assessment. While valuable for understanding financial implications, revenue metrics do not drive the core statistical analysis or winner determination process.

Why Recovery Rate is Primary: Recovery rate provides the most statistically reliable foundation for A/B testing because it represents a clear success/failure outcome for each campaign, enabling robust statistical significance testing across different sample sizes and campaign volumes.

Understanding A/B Testing Benefits

Payment Recovery A/B testing addresses several key challenges that businesses face when trying to optimize their failed payment campaigns. Without testing, you might wonder whether different email timing, messaging approaches, or offer strategies could improve your recovery rates. A/B testing answers these questions with evidence rather than guesswork.

Recovery Rate Optimization: By testing different campaign approaches, you can identify which strategies lead to higher payment recovery rates. Recovery rate is the primary metric that drives all statistical analysis and winner determination, and even small improvements in it can translate to significant revenue gains over time.

Revenue Impact Analysis: The system tracks not just recovery rates but also the actual revenue recovered by each variation. This revenue data provides important business context and impact assessment, helping you understand the financial implications of each approach while the statistical engine focuses exclusively on recovery rate performance for determining test outcomes.

Customer Experience Insights: Different campaign variations may resonate differently with your customer base. A/B testing reveals which messaging, timing, and approach customers respond to most positively.

Risk Mitigation: Rather than implementing changes across all your recovery campaigns at once, A/B testing lets you validate new approaches on a subset of failed payments before broader rollout.

Setting Up Your First A/B Test

Creating an A/B test for Payment Recovery requires an existing segmented campaign. Primary campaigns cannot be A/B tested because they serve as the default fallback for all customers who don't match specific segments. This ensures your Payment Recovery system maintains complete coverage while allowing targeted testing.

Prerequisites for A/B Testing

Before creating an A/B test, ensure you meet these requirements:

- Segmented Payment Recovery campaign that's both published and active

- Campaign targets specific customer groups (subscription value, lifecycle stage, or billing history)

- Sufficient volume to detect meaningful improvements (typically requiring several thousand failed payments)

- Not a primary campaign (primary campaigns cannot be A/B tested because they serve as the default fallback)

Volume Requirements: Your segment should generate consistent failed payment volume to reach statistical significance within a reasonable timeframe. Lower volumes will require longer test durations to achieve reliable results.
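To get a rough feel for the volume involved, the sketch below applies the standard sample-size formula for comparing two proportions. It is a planning aid under stated assumptions, not Churnkey's internal calculation: the function name `campaigns_per_variation`, the 80% power default, and the example rates are all illustrative choices.

```python
import math
from statistics import NormalDist


def campaigns_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate campaigns needed in EACH variation to detect the given lift.

    Assumes a two-sided two-proportion z-test with equal-sized variations.
    alpha=0.05 matches the 95% confidence level; power=0.80 is an assumption.
    """
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to compute a sample size")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha=0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power=0.80
    mean_rate = (baseline_rate + expected_rate) / 2

    numerator = (
        z_alpha * math.sqrt(2 * mean_rate * (1 - mean_rate))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical example: detecting a lift from a 40% to a 45% recovery rate.
print(campaigns_per_variation(0.40, 0.45))
# A smaller expected lift needs far more campaigns.
print(campaigns_per_variation(0.40, 0.42))
```

Under these assumptions, a five-point lift from a 40% baseline needs roughly 1,500 campaigns per variation, which is consistent with the "several thousand failed payments" guideline once both variations are counted.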

Creating the Test

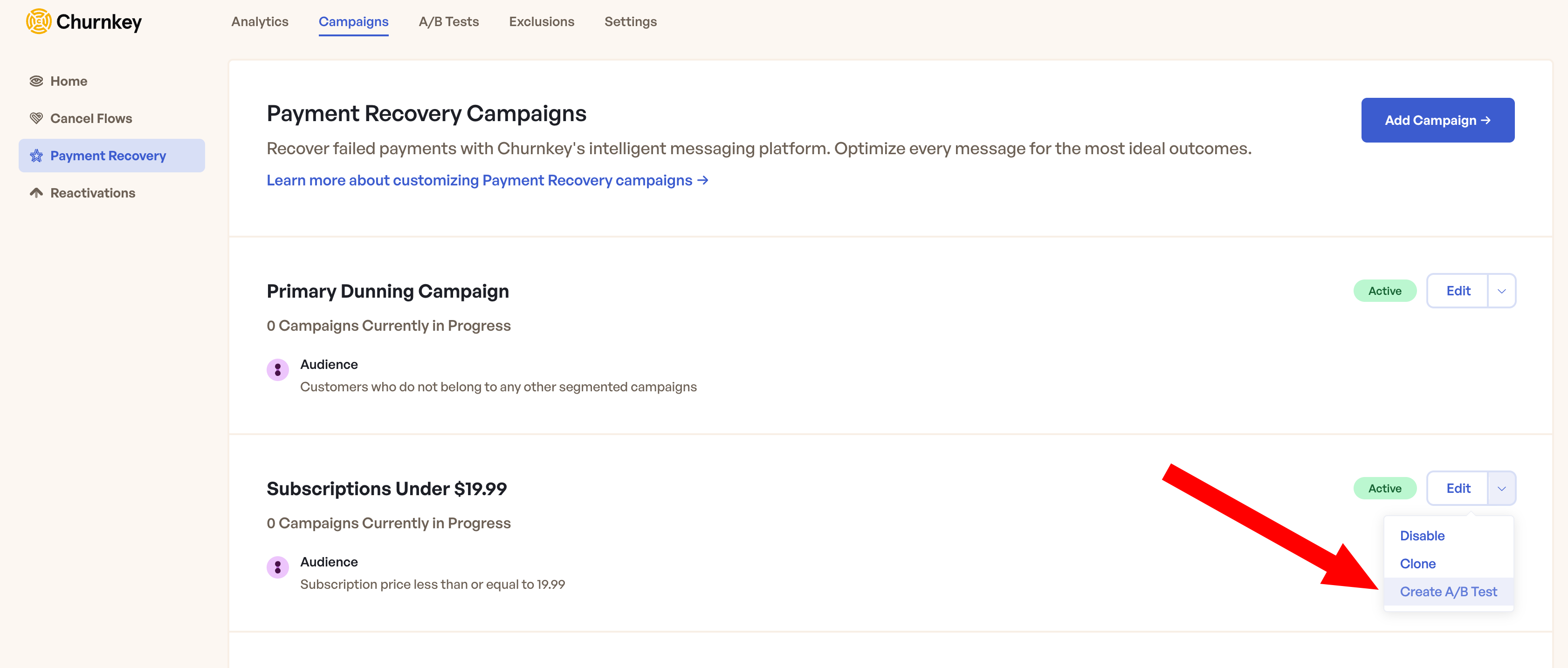

Follow these steps to create your A/B test:

- Navigate to your Payment Recovery campaigns

- Locate the segmented campaign you want to test

- Select "Create A/B Test" from the campaign's action menu

- Provide a descriptive name that clearly identifies what you're testing

The system automatically creates a duplicate of your original campaign, which becomes the second variation in your test.

Configuring Test Variations

Once created, you have two campaign variations: your original campaign (Variant A) and a newly created copy (Variant B). The copy initially matches your original campaign exactly, so you'll need to modify one or both variations to test different approaches.

Variation Configuration: Make the changes you want to test in one or both campaign variations. Common test variations include:

- Email timing adjustments

- Message content modifications

- Recovery attempt sequence alterations

Ensure both variations remain focused on the same customer segment to maintain test validity.

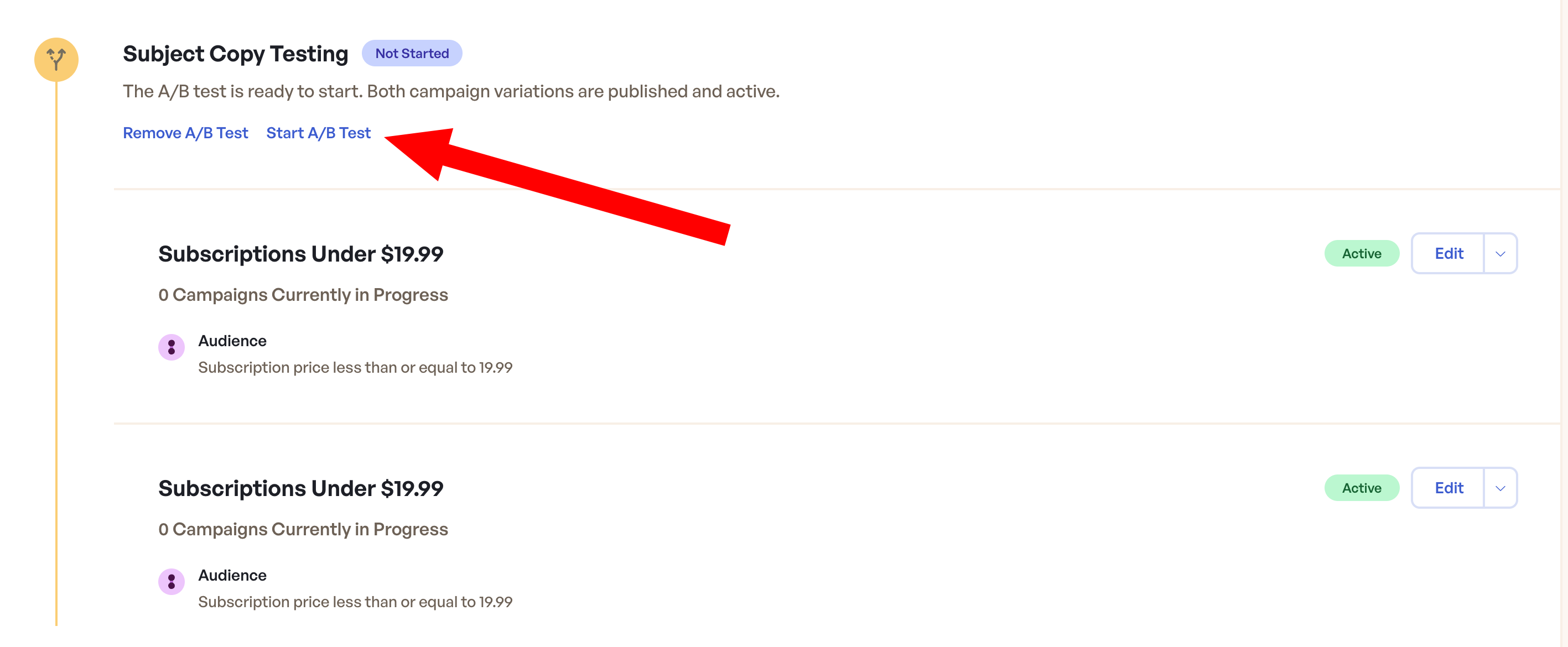

Publishing Requirements: Both campaign variations must be published and active before you can start the A/B test. The system will prevent test activation if either campaign has configuration issues.

Running Your A/B Test

Once both campaign variations are published and active, you can start your A/B test. The system begins automatically splitting incoming failed payments that match your segment between the two variations. This split is random and evenly distributed to ensure unbiased results.
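One common way to achieve an even, unbiased split is hash-based bucketing, sketched below. This is a stand-in for illustration and not a description of Churnkey's actual assignment logic, which this guide describes only as random and evenly distributed. The names `assign_variant`, `test_id`, and `payment_id` are hypothetical.

```python
import hashlib


def assign_variant(test_id: str, payment_id: str) -> str:
    """Assign a failed payment to Variant A or B with a roughly 50/50 split.

    Hashing the (test, payment) pair gives an assignment that is effectively
    random across payments but stable for any single payment.
    """
    digest = hashlib.sha256(f"{test_id}:{payment_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


# Hypothetical identifiers, used only to show the split evening out.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant("test_123", f"payment_{i}")] += 1
print(counts)
```

The useful property of this design is that the same failed payment always lands in the same variation, so a customer never receives a mix of both campaigns.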

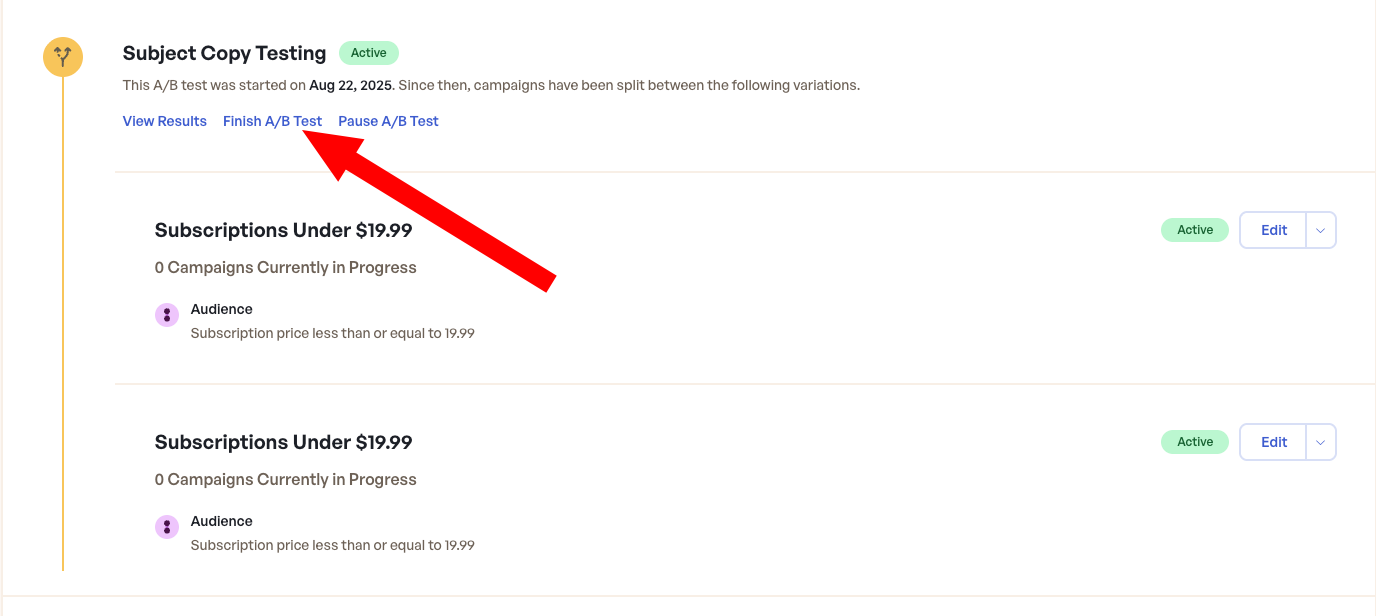

Test Activation and Management

Starting your test is straightforward through the campaign interface. Once activated, the system displays the test status as "Active" and shows when the test began. Failed payments matching your segment are randomly assigned to either Variant A or Variant B, ensuring each variation receives a representative sample.

Test Controls: While your test is running, you have several management options:

- Pause the test to make adjustments or temporarily halt the experiment

- Resume a paused test to continue data collection

- View Results to see the test's current status

Duration Considerations: Allow sufficient time for meaningful data collection:

- Payment Recovery campaigns often take days or weeks to complete their full cycle

- You need enough completed campaigns to draw statistical conclusions

- Most tests require several weeks to months of data collection for reliable results

Monitoring Test Progress

The A/B test interface provides real-time monitoring of your test's progress. You can track how many campaigns have been assigned to each variation, how many are still active, and preliminary results as they develop. However, avoid making decisions based on early data that hasn't reached statistical significance.

Data Collection: The system tracks comprehensive metrics for each variation:

- Total campaigns triggered

- Number of campaigns that successfully recovered payments

- Recovery rates

- Total revenue recovered

This data feeds into the statistical analysis that determines test outcomes.

Understanding Your Results

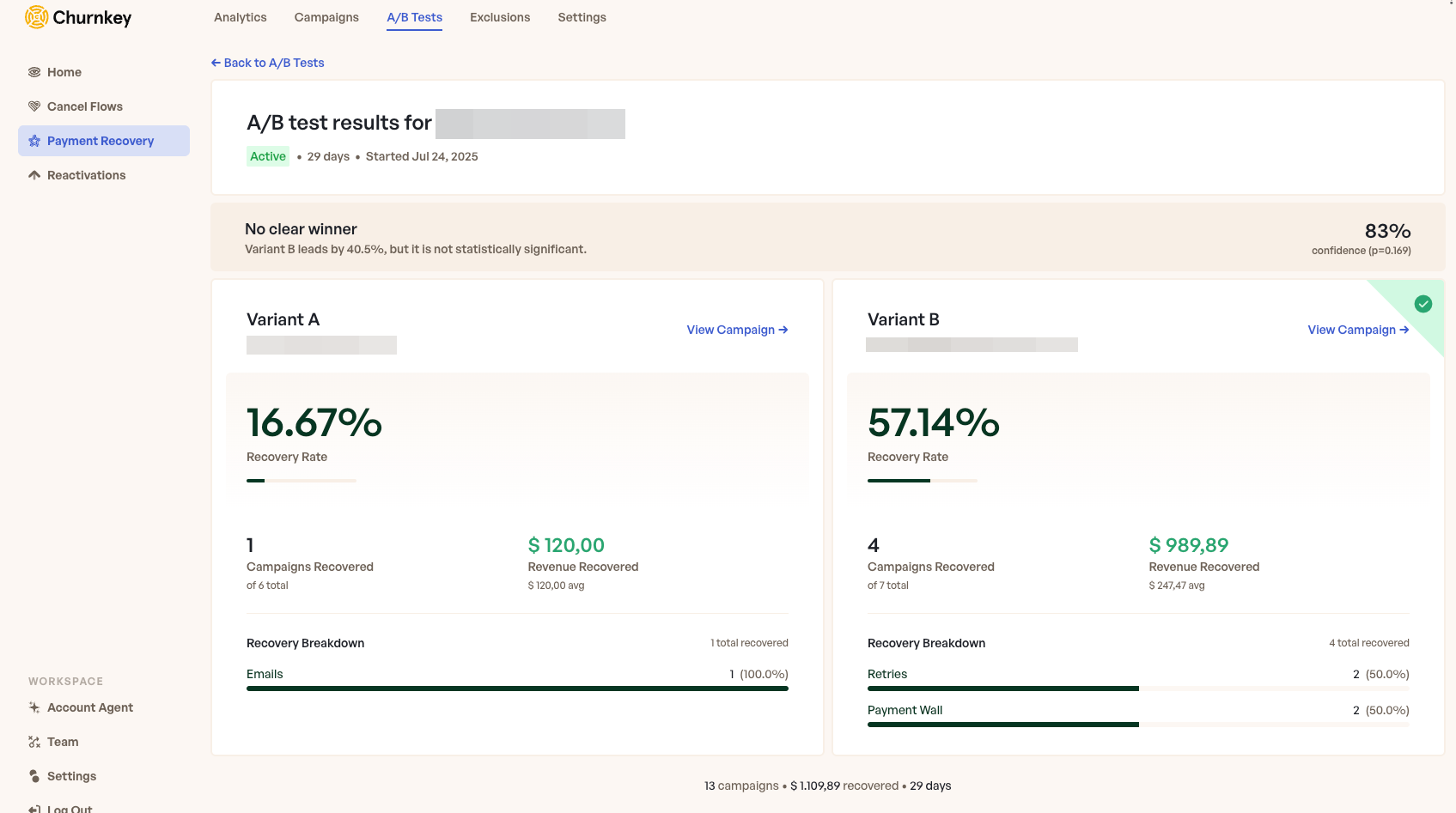

A/B test results provide detailed analytics comparing the performance of your two campaign variations. The results dashboard shows key metrics, statistical analysis, and actionable insights to guide your campaign optimization decisions.

Statistical Significance Analysis

The most important aspect of your results is the statistical confidence level, which indicates whether observed differences between variations are meaningful or likely due to random chance. Churnkey's A/B testing engine uses a two-proportion z-test exclusively on recovery rate data with a 95% confidence level to analyze results.

Statistical Engine Focus: The system performs all significance testing calculations using recovery rate percentages from each variation. Revenue amounts, while displayed for business context, do not participate in the statistical analysis or winner determination process.

Confidence Interpretation: When results show 95% or higher confidence based on recovery rate comparison, you can trust that the winning variation is genuinely better at recovering failed payments, not just lucky. Results below 95% confidence indicate the difference isn't statistically significant, meaning more data is needed or the variations perform similarly.

P-Value Understanding: The p-value is the probability of seeing a recovery rate difference at least as large as the one observed if the two variations actually performed the same. Values below 0.05 (the threshold for 95% confidence) indicate statistical significance. Lower p-values represent stronger evidence of a real difference between variations in their ability to recover failed payments.
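If you want to sanity-check a result by hand, the following is a minimal sketch of a two-proportion z-test on recovery counts. It assumes the pooled, two-sided form of the test; the guide does not state which variant the engine uses, so treat the output as an approximation. The function name and the example counts are hypothetical.

```python
import math


def two_proportion_z_test(recovered_a, total_a, recovered_b, total_b):
    """Return (z, p_value) for a two-sided, pooled two-proportion z-test."""
    if total_a <= 0 or total_b <= 0:
        raise ValueError("each variation needs at least one campaign")

    rate_a = recovered_a / total_a
    rate_b = recovered_b / total_b
    pooled = (recovered_a + recovered_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # Every campaign recovered, or none did: no evidence of a difference.
        return 0.0, 1.0

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: Variant A recovers 400 of 1,000, Variant B 450 of 1,000.
z, p = two_proportion_z_test(400, 1000, 450, 1000)
print(f"z = {z:.2f}, p = {p:.4f}, significant at 95%: {p < 0.05}")
```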

Key Performance Metrics

The results dashboard presents several critical metrics for evaluating test performance:

- Recovery Rate: The percentage of campaigns that successfully recovered failed payments - this is the primary metric for statistical analysis and winner determination

- Revenue Recovered: The total dollar amount recovered by each variation - displayed for business context and impact assessment

- Campaign Volume: The number of failed payment campaigns assigned to each variation

- Recovery Breakdown: Detailed analysis of how payments were recovered (email responses, automated retries, payment wall usage, etc.)

Recovery rate serves as the primary statistical metric for optimization and winner determination, as it provides the most reliable basis for statistical significance testing. While revenue data is tracked and displayed for business impact analysis, the core A/B testing engine focuses on recovery rate percentage to determine which variation performs better statistically.

Winner Determination

When statistical significance is achieved, the system identifies a winning variation based on recovery rate performance and provides recommendations for next steps. The winner is determined by comparing recovery rate percentages using appropriate statistical testing to ensure the results are reliable.

Statistical Focus on Recovery Rate: The A/B testing engine performs its core analysis on recovery rate percentages, applying two-proportion z-tests to determine if differences between variations are statistically significant. While revenue amounts are displayed in the interface, they serve as additional business context rather than the primary decision criteria.

Clear Winners: When one variation achieves a significantly higher recovery rate with statistical confidence, the results clearly indicate which approach to adopt. The winning variation should be implemented more broadly in your Payment Recovery strategy.

No Clear Winner: Sometimes test results show no statistically significant difference in recovery rates between variations. This doesn't mean the test failed - it means both approaches recover payments at similar rates, giving you flexibility in which to use or suggesting you need to test more dramatically different approaches.

Advanced Test Analysis

Beyond basic winner determination, dive deeper into your test results to extract maximum insights for campaign optimization. Advanced analysis helps you understand not just which variation won, but why it performed better and how to apply those learnings.

Revenue Per Recovery Analysis

While recovery rate remains the primary statistical metric for winner determination, revenue per recovery provides valuable business context about recovery quality. Some campaign approaches might achieve higher recovery rates but for lower-value transactions, while others might recover fewer payments but of higher value.

Calculate the average revenue per recovery for each variation by dividing total revenue recovered by the number of successful recoveries. This metric helps evaluate whether a variation is particularly effective with high-value customers or smaller transactions, but should be used for business analysis rather than statistical winner selection, which is based on recovery rate performance.
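The calculation described above is a single division; a small sketch with made-up figures shows how two variations can rank differently on recovery count and on revenue per recovery. The function name and all numbers are illustrative.

```python
from typing import Optional


def revenue_per_recovery(total_revenue_recovered: float,
                         successful_recoveries: int) -> Optional[float]:
    """Average revenue per successful recovery, or None if nothing was recovered."""
    if successful_recoveries <= 0:
        return None
    return total_revenue_recovered / successful_recoveries


# Made-up figures: A recovers more payments, B recovers higher-value ones.
variant_a = revenue_per_recovery(22_500.0, 450)
variant_b = revenue_per_recovery(26_000.0, 400)
print(variant_a, variant_b)  # 50.0 65.0
```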

Recovery Method Effectiveness

The results breakdown shows how payments were recovered through different methods (email engagement, automated retries, payment wall, SMS notifications). This analysis reveals which recovery mechanisms each variation optimizes and can guide future campaign design.

For example, one variation might excel at driving email engagement while another performs better with automated retry strategies. Understanding these patterns helps you design more targeted campaigns for different customer segments.

Temporal Performance Patterns

Examine how each variation performed over the test duration. Some campaigns might start strong but decline in effectiveness, while others might improve as customers become familiar with the messaging. These patterns inform decisions about campaign duration and refresh cycles.

Test Completion and Implementation

When your A/B test has collected sufficient data and achieved statistical significance, you can complete the test and implement the winning variation. Proper test completion ensures you capture maximum value from your testing efforts.

Finishing Your Test

Complete your A/B test through these steps:

- Select "Finish A/B Test" in the campaign interface

- Designate the winning variation based on your optimization goals

- The system automatically implements the winner as the active campaign

- The losing variation is deactivated but preserved for reference

Winner Selection Considerations:

- Recovery rate is the only metric the statistical engine uses for winner determination and significance testing

- Revenue data provides business context but does not influence the core statistical analysis or winner selection process

- Statistical Focus: The A/B testing engine performs all significance calculations based solely on recovery rate percentages

- Revenue per recovery metrics offer valuable insights for business impact assessment and implementation planning

- Customer experience impact and operational complexity are additional factors to weigh when implementing the winner

The winning variation automatically receives the highest priority in your campaign sequence, ensuring it becomes the primary handler for the customer segment.

Applying Learnings

Extract actionable insights from your test results to inform broader Payment Recovery strategy. Consider which elements of the winning variation contributed to its success and how those principles might apply to other campaigns.

Successful Elements: Identify specific components that drove the winner's superior performance:

- Email timing optimization

- Message tone effectiveness

- Recovery sequence design improvements

Document these findings for future campaign development.

Broader Implementation: Consider expanding successful elements to other campaign segments, but remember that different customer segments may respond differently, requiring additional testing.

Best Practices for Payment Recovery A/B Testing

Maximize the value of your A/B testing efforts by following proven best practices that ensure reliable results and actionable insights.

Test Design Principles

Follow these core principles for effective A/B testing:

- Single Variable Testing: Focus each test on one key variable to clearly understand what drives performance differences

- Meaningful Differences: Ensure variations represent meaningfully different approaches rather than minor tweaks

- Segment Consistency: Both variations should target the same customer segment for fair comparison

Testing multiple changes simultaneously makes it difficult to determine which element caused the observed results. Small tweaks like minor wording changes may not generate detectable differences, while larger strategic changes are more likely to produce clear winners.

Statistical Considerations

Ensure statistical validity with these requirements:

- Adequate Sample Size: Collect sufficient data for reliable statistical analysis

- Test Duration: Run tests for complete campaign cycles (typically 2-4 weeks per campaign) plus time to accumulate sufficient volume

- Confidence Thresholds: Results must achieve statistical confidence before making decisions

- Early Stopping: Avoid ending tests early based on preliminary results, because stopping at the first significant reading can lead to false conclusions
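The risk of early stopping can be seen in a small simulation. The sketch below runs repeated "A/A" tests in which both variations have the same true recovery rate, so every significant result is a false positive. It compares checking only at the end against stopping at the first significant interim look. All parameters (true rate, number of looks, trial count, seed) are arbitrary assumptions chosen for illustration.

```python
import math
import random


def is_significant(rec_a, n_a, rec_b, n_b, z_crit=1.96):
    """Two-sided pooled two-proportion z-test at roughly the 95% level."""
    pooled = (rec_a + rec_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return False
    return abs(rec_b / n_b - rec_a / n_a) / std_err > z_crit


def false_positive_rates(true_rate=0.4, per_variation=1000, looks=10,
                         trials=300, seed=7):
    """Return (rate when stopping at any look, rate when checking only at the end)."""
    rng = random.Random(seed)
    step = per_variation // looks
    flagged_any = 0
    flagged_final = 0
    for _ in range(trials):
        rec_a = rec_b = 0
        any_look = False
        last_look = False
        for look in range(1, looks + 1):
            # Both variations share the same true rate, so no real difference exists.
            rec_a += sum(rng.random() < true_rate for _ in range(step))
            rec_b += sum(rng.random() < true_rate for _ in range(step))
            n = look * step
            last_look = is_significant(rec_a, n, rec_b, n)
            any_look = any_look or last_look
        flagged_any += any_look
        flagged_final += last_look
    return flagged_any / trials, flagged_final / trials


peeking_rate, final_only_rate = false_positive_rates()
print(f"stop at first significant look: {peeking_rate:.2%}")
print(f"check only at the end:          {final_only_rate:.2%}")
```

Because the final look is one of the interim looks, the peeking rate can never be lower than the end-only rate, and in practice it is typically several times higher.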

Volume-Based Planning: Higher volume segments will reach statistical significance faster, while lower volume segments require longer test durations or broader targeting to achieve reliable results.
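As a back-of-the-envelope planning aid, test duration can be estimated as the time needed to accumulate enough failed payments plus one full campaign cycle for the last assigned campaigns to finish. The sketch below is an assumption-laden estimate, not a Churnkey feature; the function name, the four-week default cycle, and the example volumes are illustrative.

```python
import math


def estimated_test_weeks(required_per_variation: int,
                         failed_payments_per_week: float,
                         campaign_cycle_weeks: int = 4) -> int:
    """Weeks to fill both variations, plus one campaign cycle to complete."""
    if failed_payments_per_week <= 0:
        raise ValueError("weekly volume must be positive")
    weeks_to_fill = math.ceil(2 * required_per_variation / failed_payments_per_week)
    return weeks_to_fill + campaign_cycle_weeks


# Hypothetical segment: 1,500 campaigns needed per variation.
print(estimated_test_weeks(1500, 250))   # 16 weeks at 250 failed payments per week
print(estimated_test_weeks(1500, 1000))  # 7 weeks at 1,000 failed payments per week
```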

Effect Size Planning: Test variations that represent meaningfully different approaches rather than minor tweaks. Dramatic changes are more likely to produce detectable differences within reasonable timeframes.

Operational Excellence

Maintain operational excellence through these practices:

- Documentation: Keep detailed records of test hypotheses, configurations, and results

- Progressive Testing: Build a testing roadmap that systematically explores optimization opportunities

- Results Validation: Run follow-up tests to validate surprising or unexpected results

Documentation becomes valuable for future testing and helps avoid repeating unsuccessful experiments. Sequential testing allows continuous campaign performance improvement over time.

Troubleshooting Common Issues

Address common challenges that may arise during A/B test setup, execution, or analysis to ensure smooth testing operations.

Test Setup Problems

Cannot Create A/B Test:

- Issue: Trying to test a primary campaign rather than a segmented campaign

- Solution: Create a segmented campaign first, then set up your A/B test

- Note: Primary campaigns serve as the default for all unmatched customers and cannot be A/B tested

Test Won't Start: Ensure both campaign variations meet these requirements:

- Published and active status

- Valid email content

- Proper segment configuration

- No configuration errors

The system requires both variations to be fully configured and enabled before allowing test activation.

Data Collection Issues

No Results Showing:

- Cause: A/B tests require actual failed payment campaigns to generate data

- Solutions:

  - Allow time for campaigns to be created and complete their recovery cycles

  - Check whether your segment rarely triggers, which limits incoming data

  - Check whether the test was started recently and simply needs more time

Uneven Split Between Variations:

- Normal: Short-term imbalances due to random assignment

- Concerning: Persistent skewing over extended periods

- Potential causes: Configuration issues or very low campaign volumes
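To tell a normal short-term imbalance from a concerning one, you can test the observed split against the expected 50/50 allocation. The sketch below uses a normal approximation to the binomial distribution; the function name and the 0.001 alert threshold are assumptions (a strict threshold is a common convention for this kind of check, not something defined by Churnkey).

```python
import math


def split_imbalance_p_value(assigned_a: int, assigned_b: int) -> float:
    """Two-sided p-value for the observed split under a true 50/50 assignment."""
    total = assigned_a + assigned_b
    if total == 0:
        raise ValueError("no campaigns have been assigned yet")
    # Under a fair split, assigned_a has mean total/2 and variance total/4.
    z = (assigned_a - total / 2) / math.sqrt(total / 4)
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts.
print(split_imbalance_p_value(520, 480))    # mild imbalance, consistent with chance
print(split_imbalance_p_value(5600, 4400))  # large persistent skew, worth investigating
```

A p-value far below the chosen threshold suggests the skew is unlikely to be random and points toward a configuration issue.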

Analysis Challenges

Results Never Reach Significance: Potential solutions:

- Run the test longer for larger sample sizes

- Expand the customer segment to increase volume

- Test more dramatically different approaches

- Verify that the variations differ enough to produce a detectable difference in recovery rate

Conflicting Metrics:

- Issue: One variation has a higher recovery rate while the other generates more revenue

- Statistical Solution: The A/B testing engine prioritizes recovery rate for winner determination, as this provides the most reliable statistical foundation

- Business Context: Use revenue data for impact assessment, but recovery rate remains the primary metric for test conclusions

- Decision Framework: Select the variation with statistically significant recovery rate improvement, then analyze revenue context for implementation planning
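The decision framework above can be expressed as a short rule: pick a winner only when the recovery rate difference is significant, and treat revenue as context. This is a sketch of that rule with hypothetical names and inputs, not the engine's implementation.

```python
from typing import Optional


def pick_winner(rate_a: float, rate_b: float, p_value: float,
                alpha: float = 0.05) -> Optional[str]:
    """Return "A" or "B" when the recovery rate difference is significant, else None.

    Revenue is deliberately not an input: it informs rollout planning,
    not the statistical decision.
    """
    if p_value >= alpha:
        return None
    return "A" if rate_a > rate_b else "B"


# Hypothetical conflicting case: A recovers at a higher rate, B brings in more revenue.
winner = pick_winner(rate_a=0.45, rate_b=0.40, p_value=0.02)
revenue = {"A": 22_500.0, "B": 26_000.0}
print(winner, revenue)  # the winner is chosen on recovery rate; revenue is reviewed alongside
```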

When troubleshooting issues persist, the A/B testing system includes detailed logging and diagnostic information. Review campaign assignment logs, check segment matching criteria, and verify that both variations are processing failed payments as expected. For complex issues, consider reaching out to support with specific test details and observed symptoms.

The Payment Recovery A/B testing system provides powerful tools for optimizing your failed payment recovery strategy through data-driven experimentation. By following these guidelines and best practices, you can systematically improve your recovery rates, maximize revenue retention, and create more effective customer experiences during the Payment Recovery process.